With record numbers of citizens heading to the polls this year, some parliaments have been passing new legislation to reduce the impact of online hate and disinformation. Some of these measures may already be starting to show results.

Extremism and disinformation both existed long before the internet, but the digital age has enabled hate speech to spread further and faster and to target audiences more accurately. The explosive rise in artificial intelligence may well exacerbate the problem.

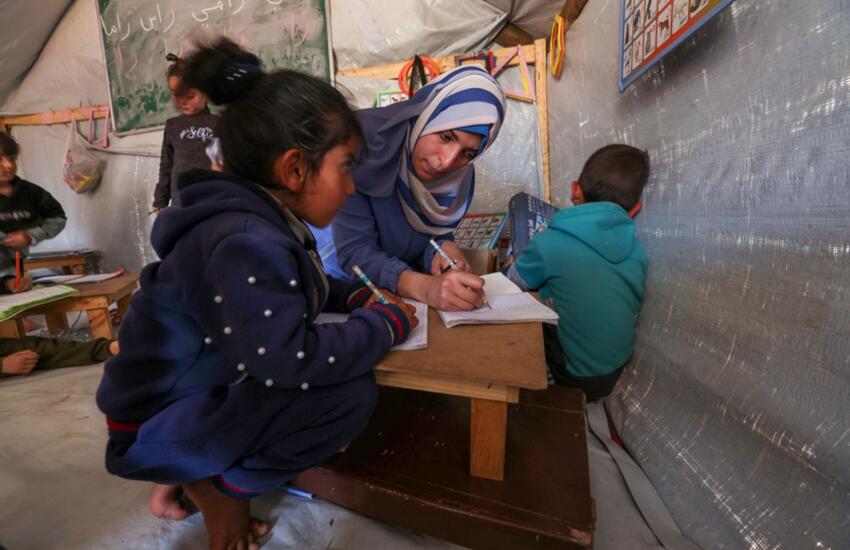

This online hate – which includes abuse, harassment and incitement – undermines democratic politics by perverting debate, provoking violence and discouraging many people from engaging with politics. It fuelled the murder of British MP Jo Cox and the storming of the US Capitol. The IPU has reported on its impact on recent elections, particularly the gender-based online violence that women politicians can face.

Serious about compliance

However, new legislation – such as the EU’s Digital Services Act – may already be having an impact. The Act requires digital platforms to prevent hateful or abusive content and places heavier requirements on large platforms, those with more than 45 million monthly users. By May 2024, more than 20 platforms had joined the list of large platforms, including Bing, Facebook, Google, Instagram, TikTok, X (formerly Twitter), and YouTube.

The Act requires platforms to enable the immediate identification and removal of content that is abusive or illegal. It also demands more transparency on the platforms’ approach to misinformation and propaganda. For companies that fail to comply, the fines could be worth as much as 6% of their global turnover.

Ahead of the European parliamentary elections, which took place on 6-9 June 2024, Brussels also introduced new election guidelines requiring the platforms to establish dedicated teams for tackling hate and disinformation and to work closely with cybersecurity agents across the European Union.

And Europe has shown that it is serious about compliance. In April 2024, the European Commission opened formal proceedings to determine whether Meta – which owns Facebook and Instagram – had breached the Act.

The UK’s 2023 Online Safety Act also targets online hate. Considered to be one of the broadest and strictest legislative frameworks for the internet, the Act aims primarily to protect online users, especially children, by making social media companies and search services responsible for user safety. Companies are legally required to act against a range of illegal content, including fraud, incitement to violence, and abusive or hateful content.

In a recent media interview, an official from the UK’s newly empowered regulator, Ofcom, said that work was being done with individual companies to ensure compliance as soon as possible.

Looking ahead

Despite the fears ahead of the European parliamentary elections, social media appears to have been relatively free from online hate and disinformation. That suggests an early victory for the Digital Services Act, even if online hate remains a serious threat.

Political extremists, troll farms and fraudulent individuals still have powerful incentives to continue spreading online hate and – powered by artificial intelligence – may find new ways around the existing legislation.

Meanwhile, the Centre for Countering Digital Hate, an NGO set up in 2017, says that not only do online hate and disinformation cause deep and widespread harm, but tech companies also make money from them. “We need to reset our relationship with technology companies and collectively legislate to address the systems that amplify hate and dangerous misinformation around the globe,” the organization says.

The IPU itself is preparing a resolution for its 180 Member Parliaments, “The impact of artificial intelligence on democracy, human rights and the rule of law”, which will set out principles and guidance for parliamentary action on the regulatory framework for AI.

The resolution is expected to be adopted at the 149th IPU Assembly, which will take place from 13 to 17 October 2024 in Geneva.